About Agenta

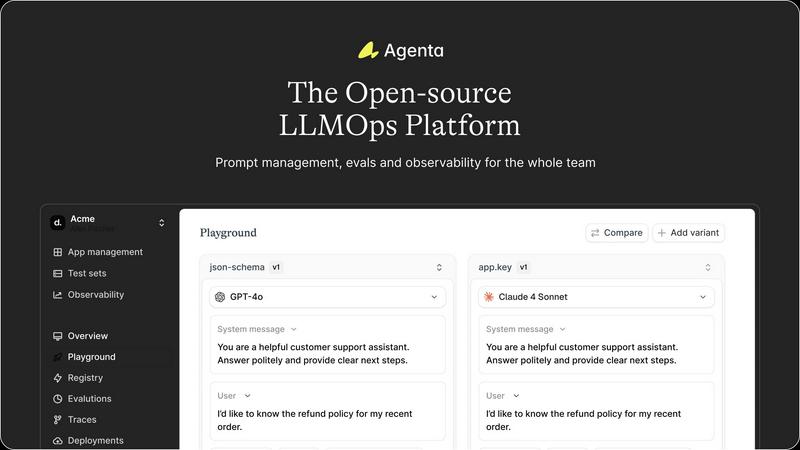

Alright, let's break it down. Agenta is the open-source LLMOps sidekick you didn't know you were missing, built to stop the chaos of shipping AI apps. You know the vibe: prompts are lost in Slack DMs, your PM is vibing with a Google Sheet, your devs are in VS Code, and everyone's just yolo'ing changes to production hoping the LLM doesn't go off the rails. It's a mess.

Agenta swoops in as the single source of truth for your whole squad. It's a platform where developers, product managers, and domain experts can actually collaborate without wanting to yeet their laptops. Think of it as your mission control for building reliable LLM applications. You get a unified playground to experiment with prompts and models, tools to run automated evaluations so you're not just guessing what works, and full observability to see what's breaking in production.

It's for any AI team that's tired of the scattered workflow and wants to ship faster without the constant fear of a random AI hallucination taking down their feature. The main flex? Turning unpredictable LLM development into a structured, evidence-based process where everyone's on the same page.

Features of Agenta

Unified Playground & Experimentation

This is your central hub for all the prompt tinkering. Stop toggling between a million tabs. Compare different prompts, models, and parameters side by side in one clean interface. Found a weird output in production? Just save that trace directly into a test set and start experimenting on it immediately. The playground keeps a complete version history of all your prompts, so you can always roll back when that "brilliant" idea at 2 AM turns out to be garbage. It's model-agnostic, so you can play with OpenAI, Anthropic, or open-source models without getting locked into one vendor.
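To make the version-history idea concrete, here's a minimal sketch in plain Python. It is not Agenta's SDK or data model: `PromptHistory`, `commit`, and `rollback` are hypothetical names invented for illustration, and the sketch assumes a rollback is stored as a new version so nothing ever gets overwritten.

```python
from dataclasses import dataclass, field


@dataclass
class PromptHistory:
    """Append-only version history for one prompt (hypothetical helper, not Agenta's API)."""

    versions: list[str] = field(default_factory=list)

    def commit(self, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.append(template)
        return len(self.versions)

    def current(self) -> str:
        """Return the newest version."""
        return self.versions[-1]

    def rollback(self, version: int) -> int:
        """Re-commit an older version as the newest one, keeping the full history."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        return self.commit(self.versions[version - 1])


history = PromptHistory()
history.commit("Summarize the ticket in two sentences.")
history.commit("Summarize the ticket as a haiku.")  # the 2 AM idea
history.rollback(1)  # back to the version that worked
print(history.current())
```

Keeping the history append-only means the garbage idea is still on record, which helps when someone asks why a prompt changed.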

Automated Evaluation Suite

Ditch the vibe checks. Agenta lets you build a legit evaluation process that replaces guesswork with cold, hard evidence. You can set up automated evaluations using LLM-as-a-judge, use built-in metrics, or plug in your own custom code as evaluators. The killer feature? You can evaluate the full trace of an agent's reasoning, not just the final output. This means you can pinpoint exactly where in a complex chain-of-thought your agent started cooking up nonsense. You can even integrate human feedback from your domain experts right into the workflow.
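Here's a rough sketch of what "evaluate the full trace, not just the final output" can look like as a custom code evaluator. Everything in it is an assumption made for illustration: `Step`, `evaluate_trace`, and the substring check are hypothetical and are not Agenta's evaluator interface. A real setup might swap the substring check for an LLM-as-a-judge call or a built-in metric.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One step of an agent run (hypothetical shape, for illustration only)."""

    name: str    # e.g. "retrieval", "reasoning", "answer"
    output: str  # what that step produced


def evaluate_trace(steps: list[Step], required_fact: str) -> dict:
    """Score each step 1.0 if its output mentions the required fact, else 0.0.

    Also reports the first step that scored 0.0, which is where the run
    first lost the fact.
    """
    fact = required_fact.lower()
    scores = {step.name: float(fact in step.output.lower()) for step in steps}
    first_miss = next((name for name, score in scores.items() if score == 0.0), None)
    return {"scores": scores, "first_failing_step": first_miss}


trace = [
    Step("retrieval", "Policy: refunds are allowed within 30 days."),
    Step("reasoning", "Refunds are never allowed."),
    Step("answer", "Sorry, we cannot refund this order."),
]
report = evaluate_trace(trace, "30 days")
print(report["first_failing_step"])
```

Scoring only the final answer would tell you the run failed. Scoring every step shows the retrieval was fine and the reasoning step is where things went sideways.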

Production Observability & Debugging

When your LLM app breaks in production (and it will), debugging doesn't have to be a nightmare. Agenta traces every single request, giving you a detailed map of the entire execution, so you can find the exact failure point: was it the retrieval step, the prompt, or the model call? You and your team can annotate these traces with notes, or turn any problematic trace into a test case with one click, closing the feedback loop. Plus, set up live evaluations to monitor performance and catch regressions before your users do.
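The trace-to-test-case loop can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not Agenta's trace format: `Span`, `first_failure`, and `to_test_case` are hypothetical names, and the sketch assumes a failed step is marked by a non-empty `error` field.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """One traced step of a request (hypothetical shape, for illustration only)."""

    name: str
    input: str
    output: str
    error: Optional[str] = None  # set when the step failed


def first_failure(spans: list[Span]) -> Optional[Span]:
    """Return the earliest span that recorded an error, or None if all succeeded."""
    return next((span for span in spans if span.error is not None), None)


def to_test_case(spans: list[Span], note: str = "") -> dict:
    """Turn a trace into a replayable test case keyed on the original request input."""
    if not spans:
        raise ValueError("cannot build a test case from an empty trace")
    failed = first_failure(spans)
    return {
        "input": spans[0].input,  # the user's original request
        "failed_step": failed.name if failed else None,
        "note": note,  # free-text annotation from a teammate
    }


trace = [
    Span("retrieval", "Where is my order?", "3 documents"),
    Span("prompt", "3 documents", "rendered prompt"),
    Span("model_call", "rendered prompt", "", error="timeout after 30s"),
]
case = to_test_case(trace, note="model call timed out")
print(case)
```

The test case keeps the original input and the annotation together, so the same request can be replayed against a fixed prompt or model setting later.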

Collaboration-First Workflow

Agenta smashes the silos. It gives your non-coder product managers and subject matter experts a safe UI where they can edit prompts, run experiments, and compare results without needing to touch a line of code. Everyone can run evaluations and see the data, making decisions based on the same evidence. There's full parity between the UI and the API, so your devs can work programmatically while the rest of the team uses the interface, all synced to the same central hub. No more emailing CSV files back and forth.

Use Cases of Agenta

Building and Tuning Customer Support Agents

Teams building AI support chatbots can use Agenta to systematically test different prompt variations for handling tricky customer queries. They can save real failed interactions from production as test cases, evaluate which prompt version performs best using automated and human-in-the-loop checks, and continuously monitor the live agent for any drop in response quality or helpfulness.

Developing Reliable Content Generation Workflows

Marketing and content teams creating blogs, social posts, or product descriptions with LLMs can centralize their prompt library. They can A/B test different creative directions, use evaluators to check for brand voice consistency and factual accuracy, and ensure that new prompt "improvements" actually generate better content before shipping them to their live tools.
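One way to check that a prompt "improvement" really is one: score the outputs of the old and new prompt on the same test set and only ship when the average goes up. The sketch below is an assumption-heavy toy, not Agenta's evaluation API. `brand_voice_score`, `mean_score`, `should_ship`, and the banned-word list are all made up for illustration.

```python
from statistics import mean
from typing import Callable

# Hypothetical brand rule: these words are off-brand.
BANNED_WORDS = {"cheap", "guarantee"}


def brand_voice_score(text: str) -> float:
    """Toy evaluator: 0.0 if the text uses a banned word, else 1.0."""
    words = {word.strip(".,!?").lower() for word in text.split()}
    return 0.0 if words & BANNED_WORDS else 1.0


def mean_score(outputs: list[str], evaluator: Callable[[str], float]) -> float:
    """Average evaluator score over a set of generated outputs."""
    return mean(evaluator(output) for output in outputs)


def should_ship(
    baseline: list[str],
    candidate: list[str],
    evaluator: Callable[[str], float],
    min_gain: float = 0.0,
) -> bool:
    """Ship only if the candidate beats the baseline by more than min_gain."""
    return mean_score(candidate, evaluator) - mean_score(baseline, evaluator) > min_gain


baseline = ["Our cheap plan saves money.", "Try the starter plan today."]
candidate = ["Our starter plan saves money.", "Try the starter plan today."]
print(should_ship(baseline, candidate, brand_voice_score))
```

In practice the evaluator would be something richer, such as an LLM-as-a-judge check for brand voice or a human review step, but the gate itself stays the same shape.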

Debugging Complex AI Agent Pipelines

When you have a multi-step agent that does retrieval, reasoning, and tool calling, failures can be hard to pin down because any step may be the culprit. Developers can use Agenta's full trace observability to see the exact step where the logic derailed. They can isolate the problematic trace, convert it into a reproducible test in the playground, and iterate on the prompt or logic until the agent handles that edge case correctly.

Managing LLM App Development Across Teams

For larger organizations, Agenta acts as the collaboration layer between engineering, product, and domain experts (like legal or medical professionals). Product managers can define evaluation criteria, experts can review outputs and provide feedback directly on traces, and engineers can implement changes—all within a shared platform with version history, ensuring alignment and auditability.

Frequently Asked Questions

Is Agenta really open-source?

Yes, for real! The core Agenta platform is fully open source (check it out on GitHub). You can self-host it, dive into the code, and even contribute to it. This means no vendor lock-in, full transparency, and the ability to customize it to fit your stack. There's also a vibrant community on Slack where builders hang out.

How does it work with my existing LLM frameworks?

Agenta is designed to be super flexible. It seamlessly integrates with popular frameworks like LangChain and LlamaIndex. It's also model-agnostic, so whether you're using OpenAI's GPT-4, Anthropic's Claude, or open-source models from Hugging Face, you can plug them right in. You can use the Agenta SDK with your existing code or start fresh.

Can non-technical team members really use this?

Absolutely! A major goal of Agenta is to break down walls. The web UI is built for product managers, operations folks, and subject matter experts. They can safely experiment with prompts in a no-code playground, set up and view evaluation results, and annotate production traces to provide feedback—all without writing a single line of Python.

What's the difference between Agenta and just using LangChain?

LangChain is a fantastic framework for building the LLM application logic (chains, agents, etc.). Agenta is an operations and collaboration platform for what comes next: managing the prompts you build with LangChain, evaluating their performance, debugging them when they fail, and collaborating with your team on improvements. They work together—you build with LangChain, and you manage the lifecycle with Agenta.
